HomoGCL: Rethinking Homophily in Graph Contrastive Learning

¹CSE, SYSU

²AI Thrust, HKUST (GZ)

³CSE, HKUST

Abstract


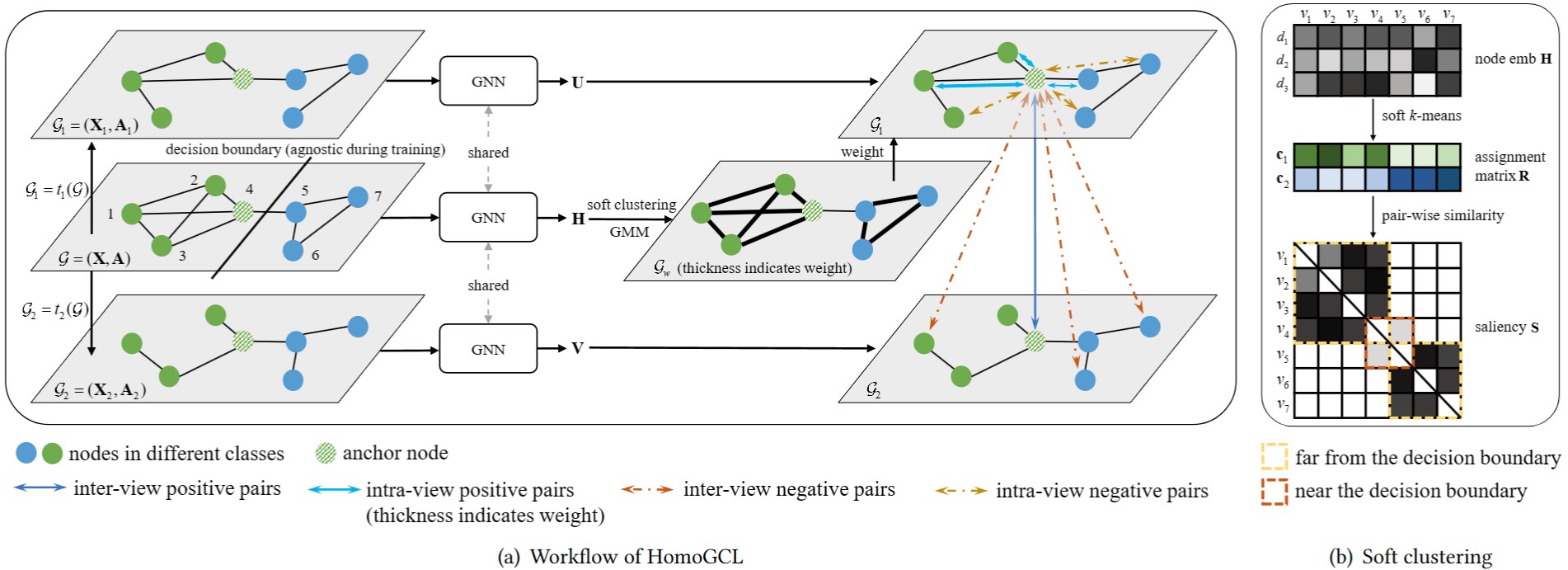

Contrastive learning (CL) has become the de facto learning paradigm in self-supervised learning on graphs, which generally follows the "augmenting-contrasting" learning scheme.

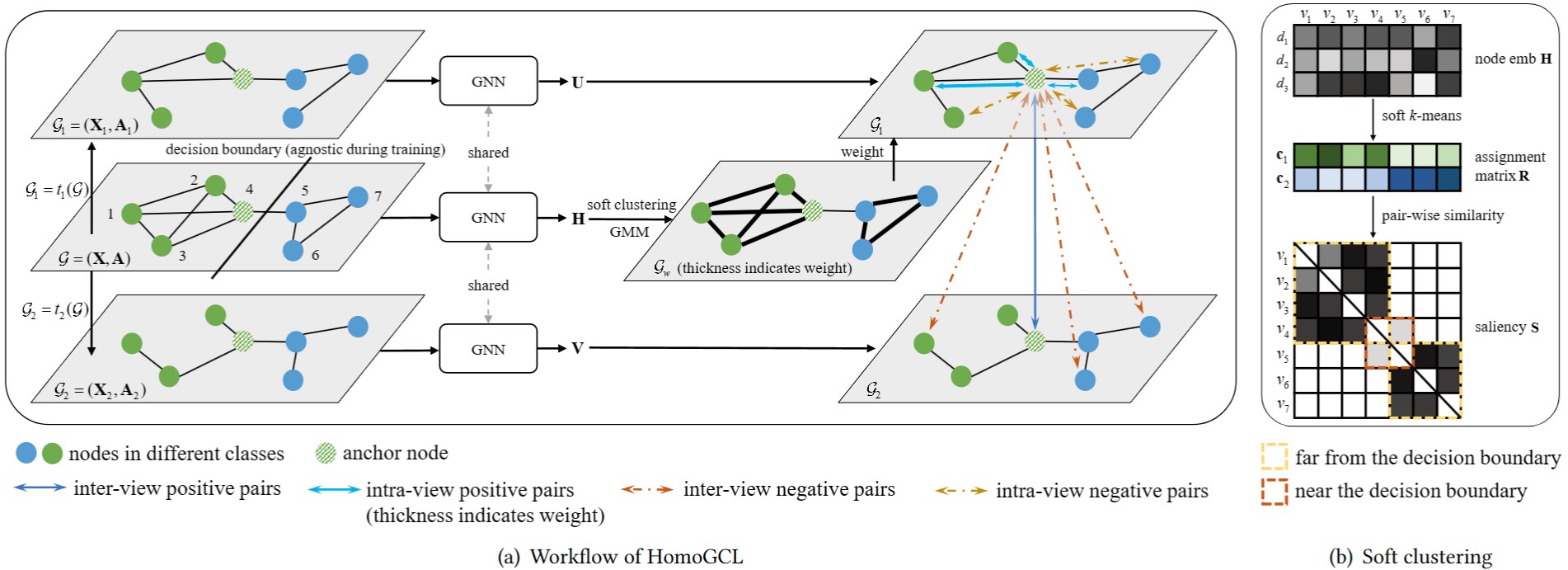

However, we observe that, unlike CL in the computer vision domain, CL in the graph domain performs decently even without augmentation.

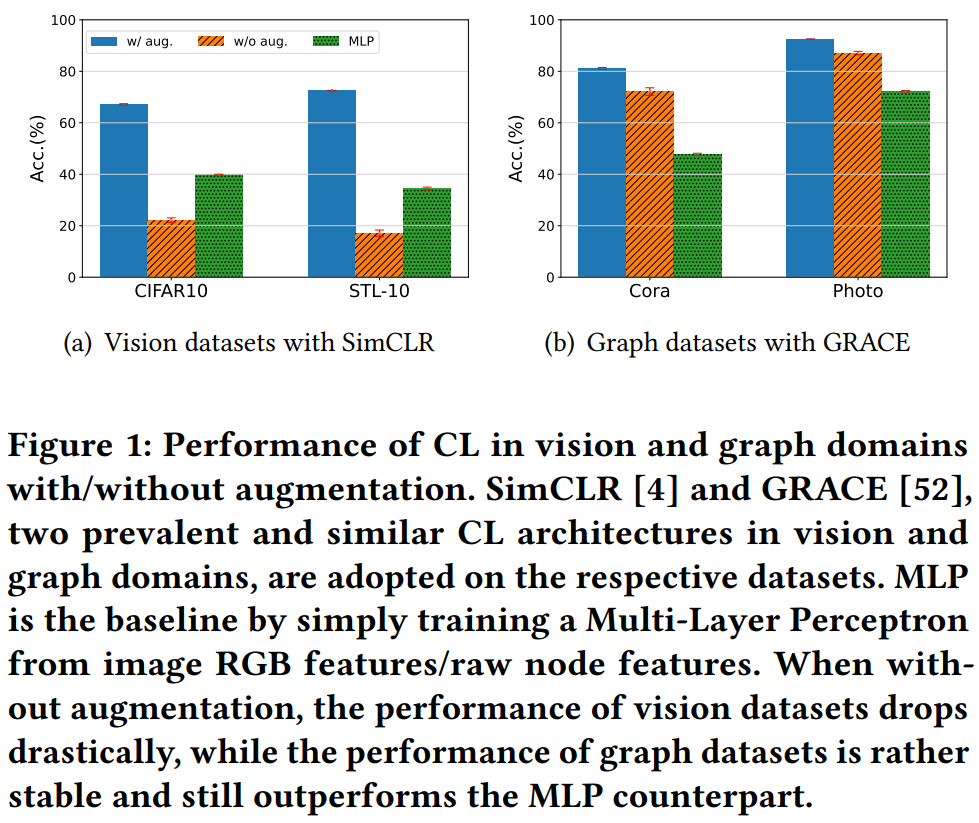

We conduct a systematic analysis of this phenomenon and argue that homophily, i.e., the principle that "like attracts like", plays a key role in the success of graph CL. Inspired to leverage this property explicitly,

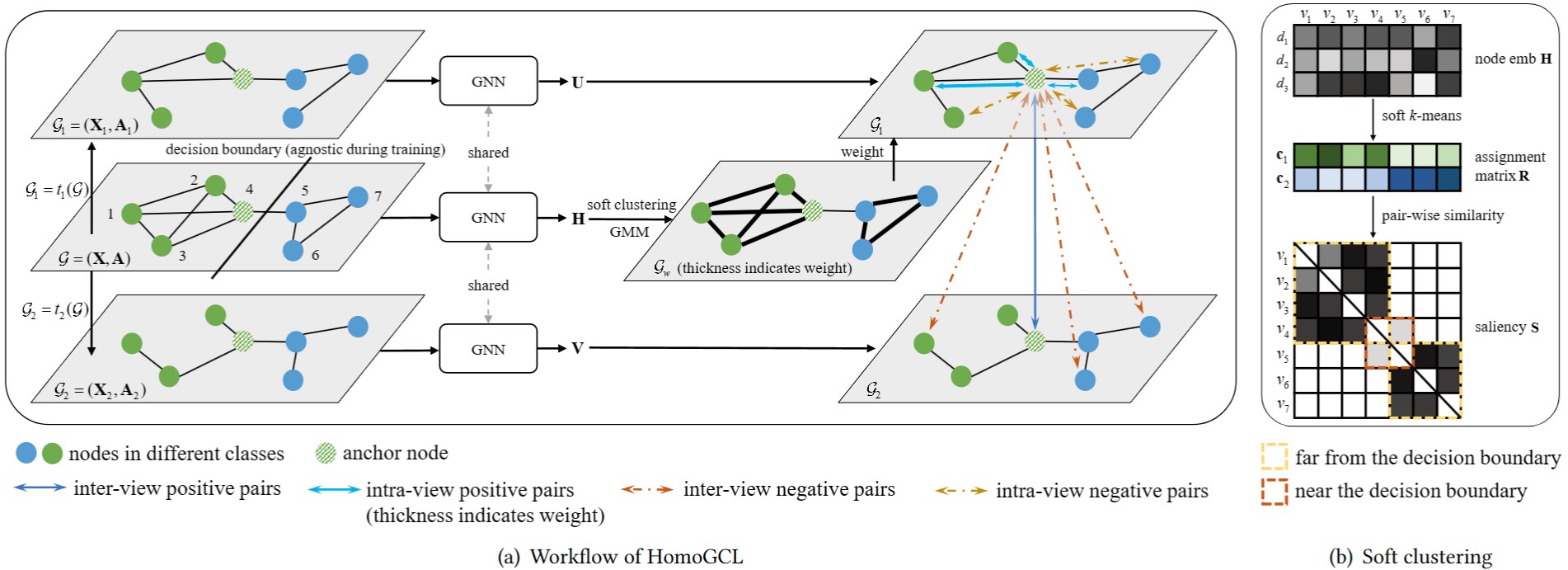

we propose HomoGCL, a model-agnostic framework that expands the positive set using neighbor nodes with neighbor-specific significances. Theoretically, HomoGCL introduces a stricter lower bound on the mutual information between raw node features and node embeddings in augmented views. Furthermore, HomoGCL can be combined with existing graph CL models in a plug-and-play way with little extra computational overhead.

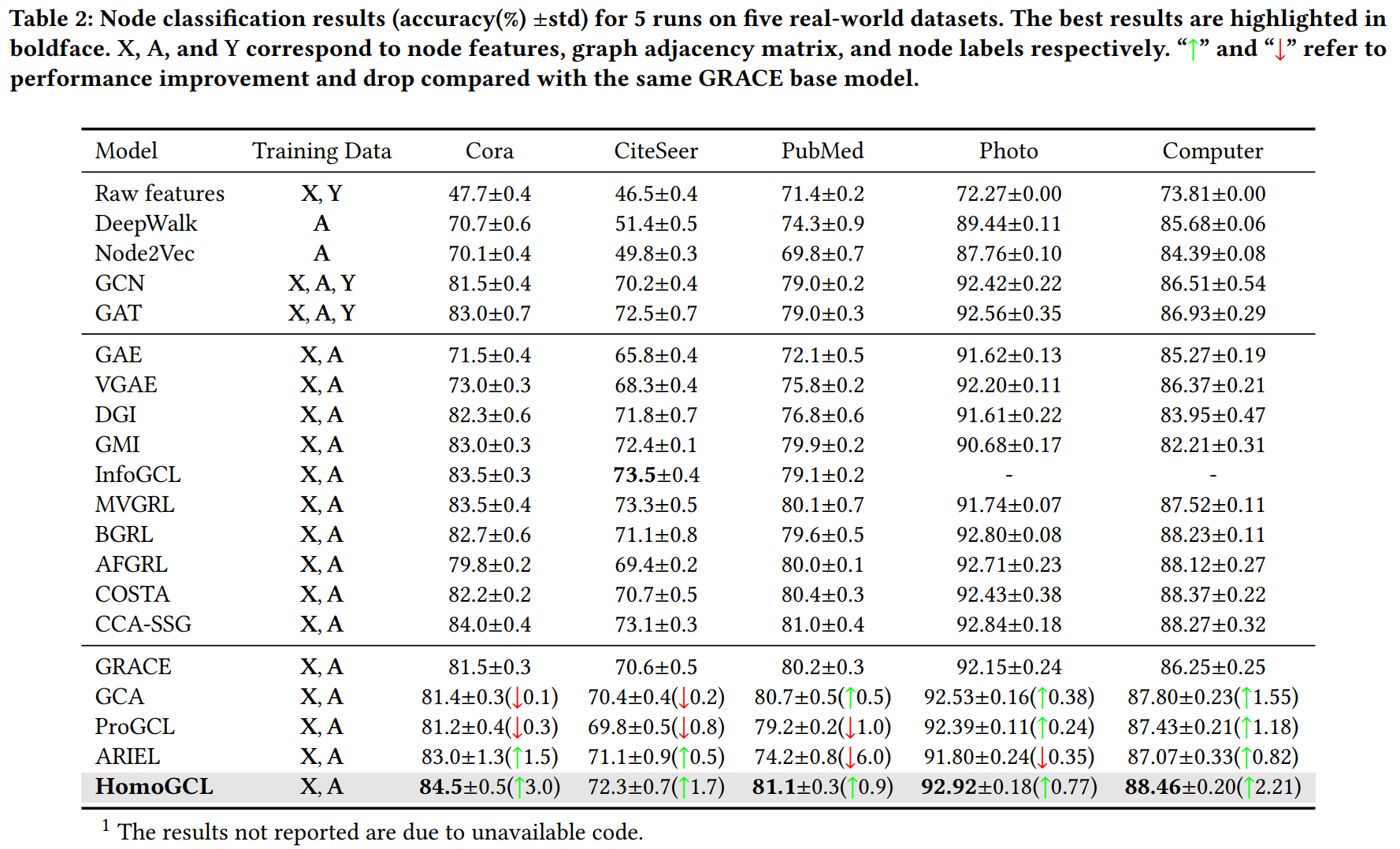

Extensive experiments demonstrate that HomoGCL yields multiple state-of-the-art results across six public datasets and consistently brings notable performance improvements when applied to various graph CL methods.
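The idea of expanding the positive set with weighted neighbors can be illustrated with a small sketch. The code below is not the paper's implementation: the function name `neighbor_expanded_loss`, the `weight` dictionary standing in for the neighbor-specific significances, and the exact way positives and negatives are combined are all assumptions made for illustration. It computes an InfoNCE-style loss for one anchor node, where neighbors count as weighted positives and non-neighbors count as negatives.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def neighbor_expanded_loss(z1, z2, neighbors, weight, i, tau=0.5):
    """InfoNCE-style loss for anchor node i (hypothetical sketch).

    z1, z2    : lists of embedding vectors, one per node, from two views
    neighbors : dict mapping a node id to an iterable of neighbor ids
    weight    : dict mapping (i, j) to a significance in [0, 1] (assumed form)
    tau       : temperature
    """
    def score(a, b):
        return math.exp(cosine(a, b) / tau)

    # The same node in the other view is always a positive.
    pos = score(z1[i], z2[i])
    neg = 0.0
    for j in range(len(z1)):
        if j == i:
            continue
        # Compare the anchor with node j in both the same and the other view.
        for view in (z1, z2):
            s = score(z1[i], view[j])
            if j in neighbors.get(i, ()):
                # Neighbors act as positives, scaled by their significance.
                pos += weight.get((i, j), 0.0) * s
            else:
                # Non-neighbors act as negatives.
                neg += s
    return -math.log(pos / (pos + neg))


# Toy example: nodes 0 and 1 are similar and connected, node 2 is different.
z1 = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
z2 = [[1.0, 0.1], [0.8, 0.2], [0.1, 1.0]]
base = neighbor_expanded_loss(z1, z2, {}, {}, i=0)
expanded = neighbor_expanded_loss(z1, z2, {0: [1]}, {(0, 1): 0.8}, i=0)
```

In this toy setting, treating the similar neighbor as a weighted positive gives a lower loss for the anchor than treating it as a negative, which is the intuition behind using homophily in the positive set.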

KDD 2023 Talk

Highlights

(1) Difference between GCL and VCL without augmentation

(2) Empirical study on graph homophily

(3) Node classification results

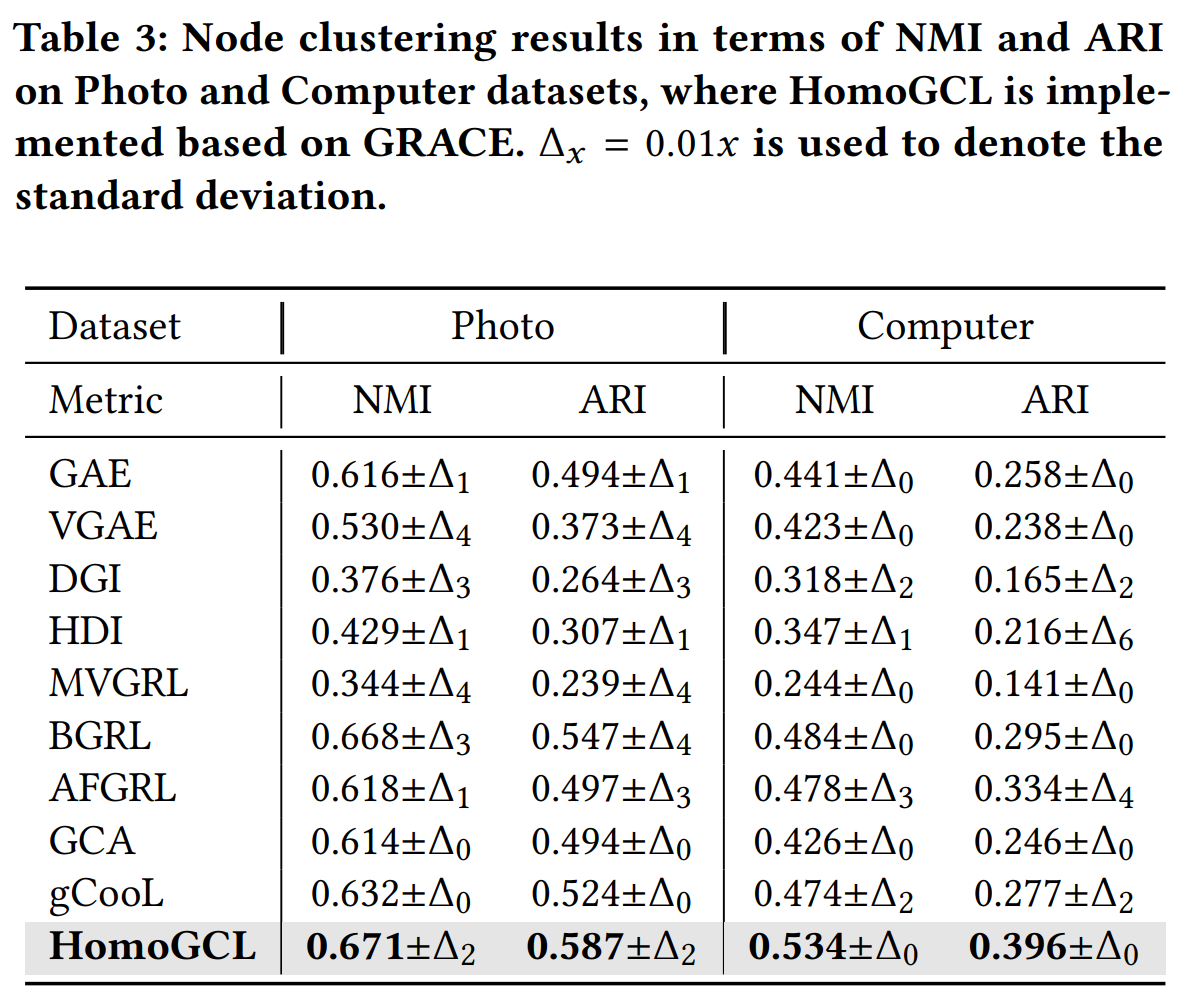

(4) Node clustering results

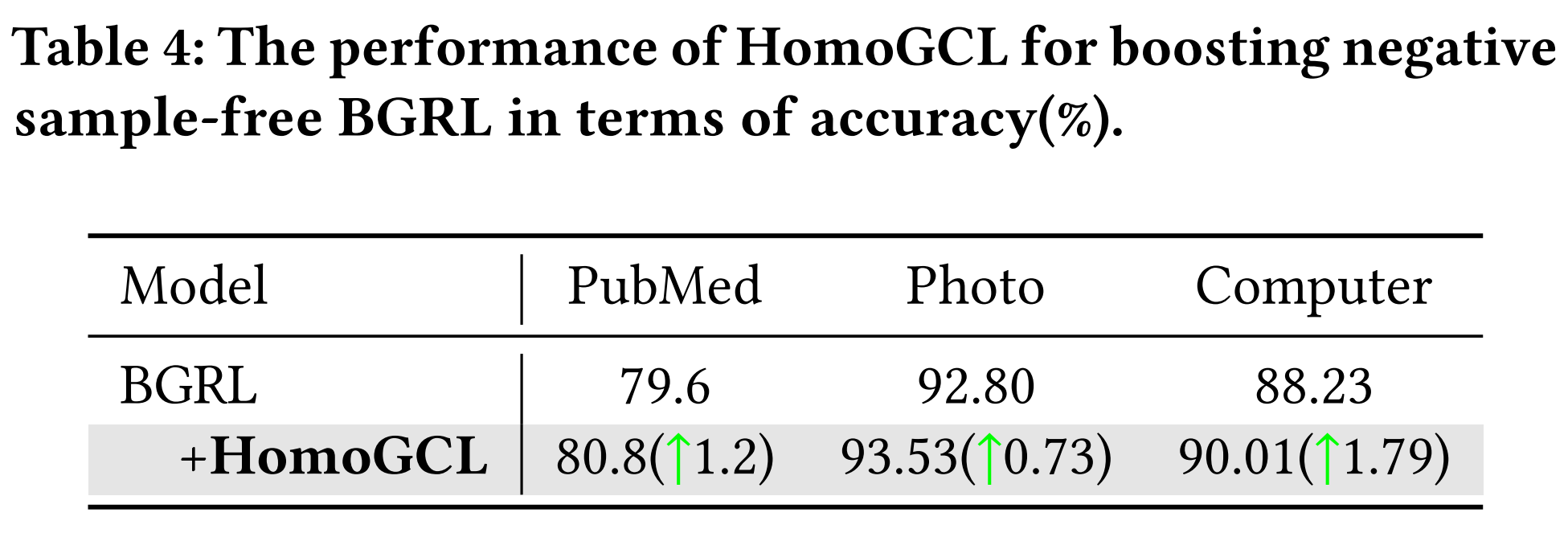

(5) Boosting other GCL methods

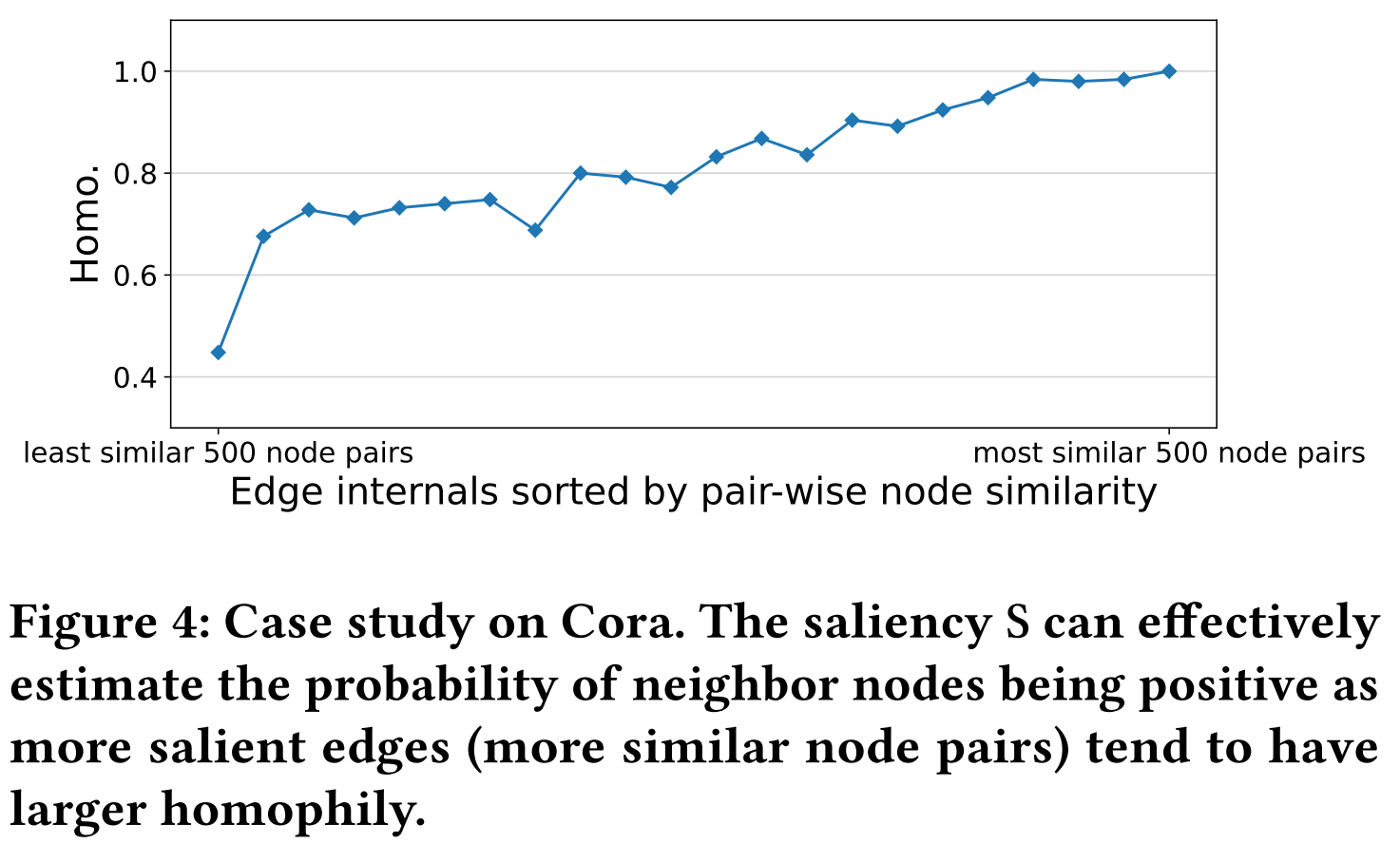

(6) Effectiveness of saliency
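
For readers unfamiliar with the homophily notion referred to in highlight (2), one common way to quantify it is the edge homophily ratio: the fraction of edges whose endpoints share a label. The sketch below uses that standard definition; the function name `edge_homophily` and the toy graph are illustrative assumptions, and the page does not state which homophily measure the empirical study uses.

```python
def edge_homophily(edges, labels):
    """Fraction of edges that connect two nodes with the same label.

    edges  : list of (u, v) node-id pairs
    labels : sequence where labels[n] is the class of node n
    """
    if not edges:
        raise ValueError("edge list must not be empty")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


# Toy path graph 0-1-2-3 with two classes: two of three edges are intra-class.
toy_edges = [(0, 1), (1, 2), (2, 3)]
toy_labels = [0, 0, 1, 1]
ratio = edge_homophily(toy_edges, toy_labels)
```

A ratio close to 1 indicates a strongly homophilous graph, where connected nodes mostly share a class.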

Citation

@inproceedings{li2023homogcl,
  title={{HomoGCL}: Rethinking Homophily in Graph Contrastive Learning},
  author={Li, Wen-Zhi and Wang, Chang-Dong and Xiong, Hui and Lai, Jian-Huang},
  booktitle={Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining},
  pages={1341--1352},
  year={2023}
}